Day 12: Implementing YOLO for Object Detection

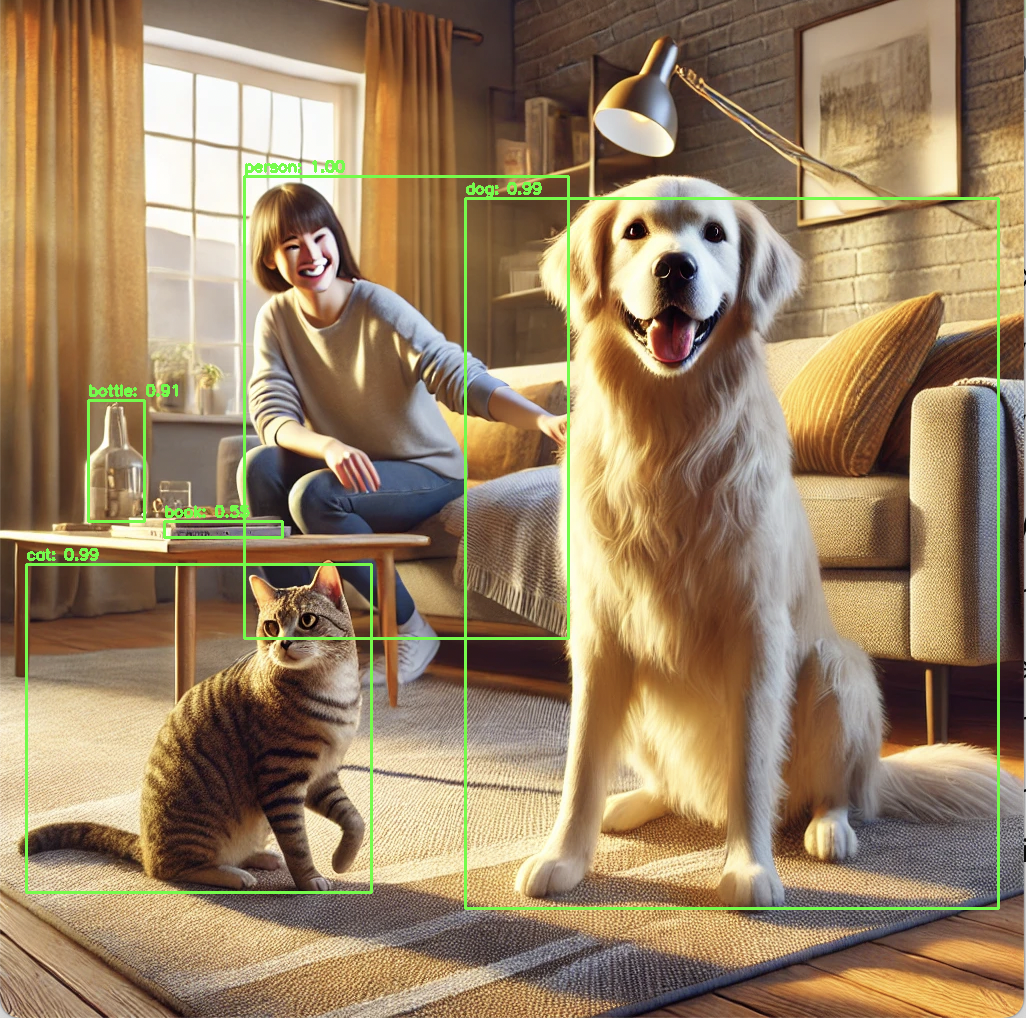

Day 12’s challenge is about implementing YOLO (You Only Look Once) for object detection. YOLO is one of the most well-known and widely used real-time object detection models due to its efficiency and speed.

Since this is your first time diving into YOLO, we’ll take a tutorial-based approach and focus on understanding the core concepts and getting a simplified implementation up and running.

Overview of YOLO (You Only Look Once)

- YOLO is a popular object detection model that can identify and locate multiple objects in a single image with a single forward pass.

- It works by dividing an image into a grid and predicting bounding boxes and class probabilities for each section of the grid.

- YOLOv3 and YOLOv4 are widely used versions, but YOLOv5 is the most approachable for beginners due to its simplicity and open-source implementation.

Objective

- Use a pre-trained YOLO model to perform object detection.

- Instead of training YOLO from scratch (which requires substantial computational power), we’ll use a pre-trained model and run it on a sample image or video.

Steps to Implement YOLO Using a Tutorial Approach

We’ll use YOLOv3 or YOLOv5, depending on simplicity and available resources. The easiest way is to use a pre-trained model and perform inference on sample images. We’ll use the OpenCV library with a pre-trained YOLOv3 model.

Step 1: Set Up the Environment

First, let’s set up the environment. We will need:

- OpenCV to load and display images.

- YOLO weights and configuration files.

- Python packages like NumPy for general processing.

You can install the required packages using:

pip install opencv-python-headless numpy

Step 2: Download YOLO Weights and Configuration

YOLOv3 uses two main files:

- Weights file (“yolov3.weights”) contains the pre-trained parameters.

- Configuration file (“yolov3.cfg”) describes the architecture of the YOLO model.

You can download them from the following sources:

Additionally, you’ll need the COCO dataset class names (“coco.names”) file, which contains the names of the 80 classes that YOLOv3 is trained to detect:

- coco.names: Download

Step 3: Implementing YOLO for Object Detection

Using OpenCV and a pre-trained YOLOv3 model, we’ll perform object detection on an image.

Full Python Code

import cv2

import numpy as np

# Load YOLOv3 weights, configuration, and COCO class names

weights_path = "yolov3.weights"

config_path = "yolov3.cfg"

names_path = "coco.names"

# Load the COCO class names

with open(names_path, "r") as f:

class_names = f.read().strip().split("\n")

# Load the YOLOv3 model

net = cv2.dnn.readNetFromDarknet(config_path, weights_path)

# Set the model to use CPU or CUDA if available (comment if only CPU is used)

net.setPreferableBackend(cv2.dnn.DNN_BACKEND_OPENCV)

net.setPreferableTarget(cv2.dnn.DNN_TARGET_CPU)

# Load the input image

image = cv2.imread("sample.jpg") # Replace 'sample.jpg' with your image file path

(height, width) = image.shape[:2]

# Create a blob from the input image

blob = cv2.dnn.blobFromImage(image, scalefactor=1/255.0, size=(608, 608), swapRB=True, crop=False)

net.setInput(blob)

# Get the output layer names

layer_names = net.getLayerNames()

output_layer_names = [layer_names[i[0] - 1] for i in net.getUnconnectedOutLayers()]

# Perform forward pass to get output from the output layers

layer_outputs = net.forward(output_layer_names)

# Initialize lists to hold the bounding boxes, confidences, and class IDs

boxes = []

confidences = []

class_ids = []

# Loop over each output layer's detections

for output in layer_outputs:

for detection in output:

scores = detection[5:] # Get the scores for all classes

class_id = np.argmax(scores) # Get the class ID with the highest score

confidence = scores[class_id] # Get the highest score (confidence)

if confidence > 0.5: # Filter out low confidence detections

# Scale the bounding box back to the size of the image

box = detection[0:4] * np.array([width, height, width, height])

(centerX, centerY, box_width, box_height) = box.astype("int")

# Get the top-left corner coordinates

x = int(centerX - (box_width / 2))

y = int(centerY - (box_height / 2))

# Save the box, confidence, and class ID

boxes.append([x, y, int(box_width), int(box_height)])

confidences.append(float(confidence))

class_ids.append(class_id)

# Apply Non-Maxima Suppression to suppress weak and overlapping bounding boxes

indices = cv2.dnn.NMSBoxes(boxes, confidences, score_threshold=0.5, nms_threshold=0.4)

# Draw the bounding boxes and class labels on the image

for i in indices.flatten():

(x, y) = (boxes[i][0], boxes[i][1])

(w, h) = (boxes[i][2], boxes[i][3])

color = (0, 255, 0) # Green color for bounding box

cv2.rectangle(image, (x, y), (x + w, y + h), color, 2)

text = f"{class_names[class_ids[i]]}: {confidences[i]:.2f}"

cv2.putText(image, text, (x, y - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5, color, 2)

# Show the output image

cv2.imshow("YOLO Object Detection", image)

cv2.waitKey(0)

cv2.destroyAllWindows()

Detailed Explanation of the Code

Load YOLO Configuration and Weights:

net = cv2.dnn.readNetFromDarknet(config_path, weights_path)

- This loads the YOLO configuration and weights files, initializing the network and preparing it for inference.

Prepare the Input Image:

blob = cv2.dnn.blobFromImage(image, scalefactor=1/255.0, size=(608, 608), swapRB=True, crop=False)

net.setInput(blob)

- “blobFromImage()” converts the image into a blob, the format that YOLO expects.

"scalefactor=1/255.0"normalizes pixel values to [0, 1].- The image is resized to (608, 608), which is the input size expected by YOLOv3.

Perform Forward Pass:

layer_outputs = net.forward(output_layer_names)

- “forward()” performs a forward pass through the network, generating detections that include class scores and bounding boxes.

Extract Information from the Detections:

for output in layer_outputs:

for detection in output:

scores = detection[5:] # Get the scores for all classes

class_id = np.argmax(scores) # Get the class ID with the highest score

confidence = scores[class_id] # Get the highest score (confidence)

- This part loops over all detections to extract class IDs, confidences, and bounding boxes for each detected object. A confidence threshold is applied to filter out weak detections.

Non-Maxima Suppression (NMS):

indices = cv2.dnn.NMSBoxes(boxes, confidences, score_threshold=0.5, nms_threshold=0.4)

- NMS removes overlapping bounding boxes for the same object, keeping only the box with the highest confidence.

Draw Bounding Boxes:

cv2.rectangle(image, (x, y), (x + w, y + h), color, 2)

cv2.putText(image, text, (x, y - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5, color, 2)

Bounding boxes and class labels are drawn on the image.

Running the Code

To run the code successfully:

- Place the following files in the same directory as your script:

- “yolov3.weights”

- “yolov3.cfg”

- “coco.names”

- An image file (e.g., “sample.jpg”) for testing.

After running the script, you should see a window displaying the image with detected objects and bounding boxes.