Deep Learning FAQ

Activation Functions:

1. ReLU (Rectified Linear Unit)

What is ReLU?

- ReLU stands for Rectified Linear Unit.

- Think of it as a function that passes positive values as they are, and stops negative values (turns them into zero).

Simple Example - Turning Off the Negative Signal:

Imagine you’re listening to a music player. The volume knob can go from -10 to +10, where negative values mean turning the volume down (even making it mute) and positive values mean turning it up. However, ReLU doesn’t care about the negative — it just ignores all negative values and keeps positive values as they are.

- If the volume knob is at +7, ReLU will let 7 through.

- If the volume knob is at -5, ReLU will say, “Nope, it’s negative,” and turn it into 0 (muted).

In Neural Networks:

ReLU acts as a gate. If the input is positive, ReLU lets it pass; if it’s negative, it blocks it and turns it into 0. This helps the network learn quickly because ReLU is very cheap to compute and, for positive inputs, its slope is always 1, so the learning signal does not shrink as it passes back through the function the way it can with Sigmoid or Tanh.

Summary of ReLU:

- Positive input: Let it through as-is.

- Negative input: Turn it into 0.

- Analogy: It’s like a music volume knob that ignores negative adjustments and lets positive adjustments through.
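
The rule above fits in one line of code. Here is a minimal Python sketch; the function name `relu` and the sample knob values are made up for illustration and do not come from any particular library.

```python
def relu(x: float) -> float:
    """Return x unchanged when it is positive, otherwise 0."""
    return max(0.0, x)


# Volume-knob readings from the analogy (illustrative values).
knob_values = [7.0, -5.0, 0.0, 2.5]
outputs = [relu(v) for v in knob_values]
print(outputs)  # [7.0, 0.0, 0.0, 2.5]
```

The +7 reading passes through untouched, while the -5 reading is muted to 0.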

2. Sigmoid

What is Sigmoid?

- The Sigmoid function squashes input values into a range between 0 and 1.

- It’s useful when you want to decide something in a probabilistic way, for example, “Is this true or not?” because 0 and 1 represent extremes.

Simple Example - Light Dimmer:

Imagine a light dimmer in a room. You have a knob to control the brightness of the light, but the light can only be between off (0) and full brightness (1).

- If you turn the knob slightly, you get a value close to 0 (dim light).

- If you turn it more, you get something close to 1 (bright light).

- If the knob is in the middle, you get a partial value, like 0.5 (medium light).

In Neural Networks:

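Sigmoid takes the input and squeezes it into a value between 0 and 1. An input of 0 maps to exactly 0.5, large negative inputs approach 0, and large positive inputs approach 1. It’s often used in the output layer for binary classification, where you need to determine if something is one category or another (e.g., cat or no cat).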

Summary of Sigmoid:

- Output range: Squashes every input into a value between 0 and 1.

- Use case: When you want to output something in the form of probabilities.

- Analogy: It’s like a light dimmer that smoothly adjusts brightness between off (0) and fully on (1).
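
To see the squashing in action, here is a small Python sketch of the standard formula 1 / (1 + e^(-x)). The function name `sigmoid` and the test inputs are illustrative choices.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the open interval (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative inputs, use an equivalent form so exp() cannot overflow.
    z = math.exp(x)
    return z / (1.0 + z)


# A strongly negative, a neutral, and a strongly positive knob position.
for x in (-6.0, 0.0, 6.0):
    print(x, round(sigmoid(x), 4))
```

The middle input (0) gives exactly 0.5, while -6 and 6 land very close to 0 and 1, matching the dimmer picture of "almost off", "medium", and "almost fully on".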

3. Tanh (Hyperbolic Tangent)

What is Tanh?

- Tanh stands for Hyperbolic Tangent.

- It’s very similar to Sigmoid, but instead of outputting values between 0 and 1, it outputs between -1 and 1.

- This is helpful because it’s centered around zero, which makes learning easier for some neural networks.

Simple Example - Thermometer:

Imagine you have a thermometer that can measure both cold and hot temperatures, with the following scale:

- Negative values mean cold temperatures.

- Positive values mean hot temperatures.

- Zero means the temperature is neutral (neither hot nor cold).

- If it’s cold, the thermometer shows a negative value like -0.7.

- If it’s hot, it shows a positive value like +0.8.

- If it’s a neutral temperature, it shows 0.

In Neural Networks:

- Tanh lets values be either positive or negative. If a neuron wants to strongly say “this input is important in a positive way,” it outputs a high positive value (close to +1). If it wants to say, “this input has a negative effect,” it outputs a value close to -1.

- This means Tanh is good for situations where being able to indicate both strong positive and negative signals is useful.

Summary of Tanh:

- Output range: Values between -1 and 1.

- Use case: Useful for representing signals that can be either positive or negative, centered around zero.

- Analogy: It’s like a thermometer showing values between cold (negative), neutral (zero), and hot (positive).
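
The sketch below uses Python's built-in `math.tanh` and also shows why Tanh is described as "very similar to Sigmoid": it is a Sigmoid stretched to the range -1 to 1 and shifted so that it is centered on zero. The helper names `sigmoid` and `tanh_via_sigmoid` are illustrative.

```python
import math


def sigmoid(x: float) -> float:
    """Plain sigmoid, fine for the small inputs used here."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh_via_sigmoid(x: float) -> float:
    """tanh(x) equals 2 * sigmoid(2x) - 1: a stretched, zero-centered sigmoid."""
    return 2.0 * sigmoid(2.0 * x) - 1.0


# Cold, neutral, and hot readings from the thermometer analogy.
for x in (-2.0, 0.0, 2.0):
    print(x, round(math.tanh(x), 4), round(tanh_via_sigmoid(x), 4))
```

Both columns agree, and the neutral input 0 maps to 0 rather than to 0.5 as it would with Sigmoid.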

What are Optimizers?

Optimizers are like guides that help your neural network find the best solution. Imagine your neural network is a hiker trying to find the lowest point in a hilly landscape (representing the minimum loss). The optimizer is the strategy or tool the hiker uses to get to the lowest point as quickly and efficiently as possible.

Optimizer 1: SGD (Stochastic Gradient Descent)

Simple Analogy: A Hiker Taking Small Steps Down a Hill

- Imagine you’re a hiker trying to reach the lowest point in a valley. You can only see the part of the hill right around you, and you take steps downward in the direction that looks like it leads lower.

- With SGD, each step is like taking a small, careful move down the hill based on the current slope you feel under your feet.

Characteristics of SGD:

- Careful Steps: You only take steps based on local information. If the ground feels like it’s sloping down, you take a step that way.

- Slow Progress: Because you’re taking small steps and sometimes relying only on what’s directly around you, you may not always find the fastest path down.

- Momentum: You can improve SGD by adding momentum, which is like letting the hiker pick up some speed when going downhill. This helps you avoid getting stuck on small bumps and can lead you more directly to the lowest point.

In a Neural Network:

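- SGD works by adjusting each parameter (weight) a little bit at a time in the direction that reduces error. That direction is estimated from a small random batch of training examples rather than the whole dataset, which is what “stochastic” refers to.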

- It’s straightforward but can be slow, especially when dealing with complex landscapes where there are lots of ups and downs (e.g., in deep networks).
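
Here is a minimal sketch of the hiker's update rule, with optional momentum. To keep it tiny, it assumes a made-up one-parameter loss, (w - 3)^2, and uses its exact slope; in real SGD the slope would be estimated from a random mini-batch. The names `grad` and `sgd` and the hyperparameter values are illustrative.

```python
def grad(w: float) -> float:
    """Slope of the toy loss (w - 3)^2, whose lowest point is at w = 3."""
    return 2.0 * (w - 3.0)


def sgd(w: float, lr: float = 0.1, momentum: float = 0.0, steps: int = 200) -> float:
    """Walk downhill for a fixed number of steps and return the final position."""
    velocity = 0.0
    for _ in range(steps):
        # Keep a fraction of the previous step (momentum), then add the new downhill step.
        velocity = momentum * velocity - lr * grad(w)
        w += velocity
    return w


print(sgd(0.0))                # plain gradient steps
print(sgd(0.0, momentum=0.9))  # the hiker is allowed to build up speed
```

Both runs end up very close to w = 3. With `momentum=0.0` each step depends only on the slope under the hiker's feet; with momentum, earlier steps keep contributing to the current one.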

Optimizer 2: Adam (Adaptive Moment Estimation)

Simple Analogy: A Hiker with Adaptive Gear and Memory

- Imagine the same hiker again, but this time you’re equipped with some special gear:

- You have adaptive boots that can adjust based on how steep the terrain is.

- You also have a notebook to remember the directions you’ve tried before.

Characteristics of Adam:

- Adaptive Steps: The boots can adjust their step size based on how steep the slope is. If it’s a gentle slope, they take larger steps. If it’s steep, they take smaller, careful steps.

- Memory: The notebook helps you remember the directions you’ve tried before. If you notice that you’ve been heading downhill steadily, you keep going in that direction with more confidence.

In a Neural Network:

- Adam combines the advantages of momentum (like remembering where you’re going) and adaptive learning rates (like adjusting the step size).

- It’s a smart hiker that adapts based on the terrain, making it both fast and effective.

- That’s why Adam is often a favorite choice for training — it adjusts well, speeds up when it’s safe, and slows down when it needs to be careful.
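
The "notebook" and the "adaptive boots" correspond to two running averages. Below is a minimal sketch of the standard Adam update on the same kind of made-up one-parameter loss, (w - 3)^2. The function names and the learning rate are illustrative; the beta and epsilon defaults are the commonly used ones.

```python
import math


def grad(w: float) -> float:
    """Slope of the toy loss (w - 3)^2, whose lowest point is at w = 3."""
    return 2.0 * (w - 3.0)


def adam(w: float, lr: float = 0.1, beta1: float = 0.9, beta2: float = 0.999,
         eps: float = 1e-8, steps: int = 500) -> float:
    m = 0.0  # notebook: running average of recent slopes (momentum)
    v = 0.0  # boots: running average of recent squared slopes (steepness)
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g * g
        # Both averages start at 0, so correct their early bias toward 0.
        m_hat = m / (1.0 - beta1 ** t)
        v_hat = v / (1.0 - beta2 ** t)
        # Steeper recent terrain (large v_hat) means a smaller effective step.
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


print(adam(0.0, steps=1))  # first step is about lr in size, whatever the slope
print(adam(0.0))           # ends close to 3
```

Notice that the very first step has a size of roughly `lr` regardless of how steep the slope is. That is the adaptive step size at work.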

Optimizer 3: RMSprop (Root Mean Square Propagation)

Simple Analogy: A Hiker with Shock Absorbers to Smooth the Descent

- Imagine the hiker again, but this time with shock-absorbing shoes. These shoes help you move steadily downhill without bouncing too much, even when the terrain gets a bit rocky.

- RMSprop is all about keeping the journey smooth and preventing big jumps that could lead you in the wrong direction.

Characteristics of RMSprop:

- Smooth Descent: The shock absorbers help smooth out the steps so that you don’t make large jumps that could lead to getting stuck or accidentally going the wrong way.

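- Short Memory: RMSprop keeps a running average of recent squared gradients, so it focuses on the recent terrain and adjusts its step size based on that. Unlike Adam, it does not also keep a running average of the direction it has been heading.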

In a Neural Network:

- RMSprop adjusts the step size based on the recent history of the gradients.

- It’s especially useful when the terrain (i.e., the loss landscape) is bumpy, which often happens in deeper neural networks.

- It helps ensure that steps are not too big, so the network is less likely to overshoot the optimum point.
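
Here is a minimal sketch of the commonly described RMSprop update, again on a made-up one-parameter loss, (w - 3)^2. The function names and hyperparameter values are illustrative assumptions.

```python
import math


def grad(w: float) -> float:
    """Slope of the toy loss (w - 3)^2, whose lowest point is at w = 3."""
    return 2.0 * (w - 3.0)


def rmsprop(w: float, lr: float = 0.01, decay: float = 0.9,
            eps: float = 1e-8, steps: int = 1000) -> float:
    avg_sq = 0.0  # running average of recent squared slopes
    for _ in range(steps):
        g = grad(w)
        avg_sq = decay * avg_sq + (1.0 - decay) * g * g
        # Divide by the recent slope size so no single step is very large.
        w -= lr * g / (math.sqrt(avg_sq) + eps)
    return w


print(rmsprop(0.0))  # ends close to 3
```

Because each step is divided by the recent slope size, the hiker moves at a steady pace of roughly `lr` per step instead of leaping on steep ground, which is the "shock absorber" effect.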

When to Use Which Optimizer?

SGD:

- Best for simpler tasks or when you want more control over the learning process.

- Works well when combined with momentum.

- Good if you don’t need adaptive learning and have a good learning rate already.

Adam:

- The most popular choice for many deep learning problems.

- Works well for a wide range of use cases, particularly when training deep networks.

- It’s fast and usually converges to a good solution with less tuning.

RMSprop:

- Similar to Adam, RMSprop is great for complex, deep networks where the terrain is uncertain.

- It’s a good choice when you need steady training without large jumps.

Techniques to Prevent Overfitting:

What is Overfitting?

- Overfitting occurs when a model learns the training data too well, including its noise and irrelevant details, and fails to generalize to new, unseen data.

- It’s like a student who memorizes every page of a book instead of understanding the main concepts — they might do well in a test with the exact same questions but will struggle when the questions are different.

Two techniques to prevent overfitting are Dropout and L2 Regularization.

1. Dropout - An Easy Explanation

What is Dropout?

- Dropout is a technique where, during training, we randomly turn off some neurons in the network.

- In simple terms, dropout forces the network to learn more robust features instead of relying on just a few neurons to make predictions.

Analogy: A Team Project

Imagine you’re working on a team project with several teammates, and each person has a unique set of skills. If everyone knows that one teammate will always do the most important part (let’s say Sarah always takes the lead), people might start depending on her too much and not learn the other tasks well enough.

But suppose, during a practice run, you decide that Sarah won’t participate. This forces the rest of the team to step up and learn how to complete the project even without her.

- The next time, someone else, maybe John, is not allowed to contribute, forcing everyone else to adjust and learn their part more thoroughly.

The outcome?

- Every member of the team is prepared and has developed a well-rounded set of skills.

- No one person is indispensable, and the project can be completed even if someone is unavailable.

How Dropout Works:

- In a neural network, dropout randomly turns off some neurons in each training iteration.

- The percentage of neurons that are turned off is called the dropout rate. If the rate is 50%, each neuron in that layer has a 50% chance of being turned off in a given iteration, so about half of them are off on average.

- By doing this, the model is forced to not rely on just a few neurons and instead distribute learning across many neurons.

Effect of Dropout:

- Better Generalization: Since the model can’t rely too much on specific neurons, it learns more generalized features, improving performance on new, unseen data.

- Prevents Overfitting: Dropout adds randomness and makes the training process less dependent on specific weights, preventing overfitting.

Example in a Neural Network:

Imagine a layer of 8 neurons in a network:

[ O O O O O O O O ] <- 8 neurons

During dropout, if we apply a dropout rate of 50%, each neuron is turned off with probability 0.5, so about 4 of these neurons are off on average. One possible outcome:

[ O X O X O O X X ] <- 'X' means turned off

Only the remaining neurons are active and contribute during that training iteration. In the next iteration, another set of neurons may be turned off randomly. Once training is finished, dropout is switched off and all neurons are used to make predictions.
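
The picture above can be sketched in a few lines of Python. This is a minimal version of the common "inverted dropout" variant, which also scales up the surviving values during training so that their expected total stays the same. The function name `dropout`, its parameters, and the sample layer are illustrative.

```python
import random


def dropout(activations, rate=0.5, training=True, rng=random):
    """Randomly zero each activation with probability `rate` while training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training:
        # At prediction time every neuron stays on and nothing is rescaled.
        return list(activations)
    keep = 1.0 - rate
    # Survivors are divided by `keep` to compensate for the neurons that were dropped.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


layer = [1.0] * 8                  # 8 neurons, all outputting 1.0
rng = random.Random(0)             # fixed seed so the run is repeatable
print(dropout(layer, rate=0.5, rng=rng))    # some entries 0.0, the rest 2.0
print(dropout(layer, training=False))       # unchanged
```

Calling the function again during training gives a different random pattern of zeros, just as a different set of neurons is turned off in each iteration.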

In Short: Dropout is like randomly benching a few team members at each practice, which forces everyone else to learn all parts of the project and makes the entire team stronger.

2. L2 Regularization - An Easy Explanation

What is L2 Regularization?

- L2 Regularization is a technique where we penalize large weights in the neural network.

- The idea is to keep the weights small so that the model doesn’t rely too heavily on any particular feature.

Analogy: The Minimalist Student

Imagine a student who is studying for a test. The student has a huge set of notes but decides that highlighting every single line will help them remember everything. This approach makes the notes bulky and hard to review effectively — and the student ends up memorizing unnecessary details.

Now, imagine that someone tells the student that they’ll be penalized for highlighting too much. They need to limit themselves to highlighting only the most important parts of their notes.

- The student now has to carefully decide what’s most important, and in the process, they learn the main concepts rather than trying to memorize everything.

How L2 Regularization Works:

- In a neural network, the goal is to find the best weights that minimize the loss.

- L2 Regularization adds a penalty to the loss function based on the magnitude of the weights.

- The idea is to keep weights small, making the network simpler and less likely to overfit.

Mathematically (without going into too much detail):

- The loss function is what the model tries to minimize.

- With L2 Regularization, a penalty term proportional to the sum of the squares of all weights is added to the loss.

- The larger the weights become, the larger the penalty.
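
As a small illustration of the penalty term, the sketch below adds lam * (sum of squared weights) to a loss value. The names `l2_penalty`, `l2_penalty_grad`, `total_loss`, the strength `lam`, and the example weights are all made up for illustration.

```python
def l2_penalty(weights, lam):
    """Penalty proportional to the sum of the squares of all weights."""
    return lam * sum(w * w for w in weights)


def l2_penalty_grad(weights, lam):
    """Slope of the penalty: each weight is pulled toward 0 in proportion to its size."""
    return [2.0 * lam * w for w in weights]


def total_loss(data_loss, weights, lam=0.01):
    """What the model actually minimizes: prediction error plus the penalty."""
    return data_loss + l2_penalty(weights, lam)


small_weights = [0.5, -0.5, 0.5]
large_weights = [5.0, -5.0, 5.0]

# Same prediction error (1.0) in both cases, but very different penalties.
print(total_loss(1.0, small_weights))
print(total_loss(1.0, large_weights))
print(l2_penalty_grad(large_weights, 0.01))
```

With the same prediction error, the model with large weights ends up with a noticeably higher total loss (about 1.75 versus about 1.0075), so training is nudged toward the smaller weights.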

Effect of L2 Regularization:

- Prevents Large Weights: L2 discourages weights from growing too large, since very large weights can lead to overfitting.

- Smoother Decision Boundary: With smaller weights, the decision boundaries formed by the model are generally simpler and smoother, which helps in generalizing to new data.

Example in a Neural Network:

- Suppose a neural network has learned that certain weights are very large because it thinks those features are extremely important.

- With L2 Regularization, the model tries to keep those weights smaller by adding a penalty to the loss function if weights grow too large.

- The result is that the network learns to spread importance across multiple features rather than relying heavily on just a few.

In Short: L2 Regularization is like telling a student they can only highlight the most important information, preventing them from relying too much on unnecessary details, resulting in more efficient learning.

Visual Example - Dropout vs L2 Regularization

Dropout: Imagine a soccer team where different players are randomly asked to sit out during practice games.

- Result: Every player improves overall skills because they can’t rely on specific key players during training.

L2 Regularization: Imagine you are carrying a backpack up a mountain, and you have too many unnecessary items in it.

- You get penalized (it becomes too hard to carry) if you try to bring everything, so you decide to bring only essential items.

- Result: You reach the top more efficiently because your backpack is lighter and more manageable.

Both Dropout and L2 Regularization are widely used because they help a model generalize better: L2 Regularization by keeping the model simple, and Dropout by adding redundancy to the learning process so that the model does not over-rely on specific pathways.

What is a Convolutional Neural Network (CNN)?

A Convolutional Neural Network (CNN) is a type of deep learning model specially designed to work with images. CNNs can recognize patterns in images, much like how we use our eyes and brain to recognize faces, objects, and everything around us.

Think of a CNN as a series of layers that each work together to identify features in an image, much like how our brain processes visual information step by step.

Imagine a Simple Example: Recognizing a Cat Picture

Imagine you’re looking at a picture of a cat. How do you know it’s a cat? Well, your brain processes the picture in parts. You might notice the whiskers, the eyes, the ears, and the shape of the face. Similarly, a CNN looks at the picture and breaks it down into parts to decide if it’s a cat.

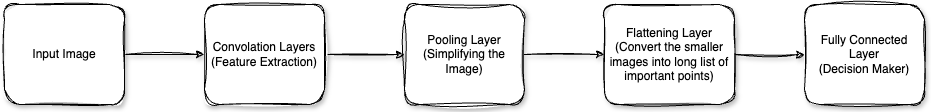

How CNNs Work: Layers that Process the Image Step by Step

A CNN is composed of a series of layers, each working as a specialist to examine different parts of the image, each time getting more detailed. Here’s how it works:

Step 1: The Input Image

An image is like a big grid of numbers. In a grayscale image, each number is the brightness of one pixel. In a color image, each pixel is represented by three values (Red, Green, Blue, or RGB), one for the intensity of each color. Imagine an image as a large piece of graph paper filled with these numbers.
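
To make the "grid of numbers" concrete, here is a tiny made-up 2x2 color image stored as nested Python lists. The pixel values and the helper `to_grayscale` are illustrative; the grayscale step uses a plain average of the three channels, which is a simplification.

```python
# A 2x2 color image: rows -> pixels -> [Red, Green, Blue], each value from 0 to 255.
image = [
    [[255, 0, 0], [0, 255, 0]],        # red pixel, green pixel
    [[0, 0, 255], [255, 255, 255]],    # blue pixel, white pixel
]

height = len(image)
width = len(image[0])
channels = len(image[0][0])
print(height, width, channels)  # 2 2 3


def to_grayscale(img):
    """Collapse each [R, G, B] pixel to one brightness value (simple average)."""
    return [[sum(pixel) / len(pixel) for pixel in row] for row in img]


print(to_grayscale(image))  # [[85.0, 85.0], [85.0, 255.0]]
```

A real photo works the same way, just with many more rows and columns.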

Layers of a CNN: Step-by-Step Analogy

Layer 1: Convolution Layer (Feature Detection)

Convolution is like scanning the image through a small window or filter. Imagine taking a small square magnifying glass and moving it over different parts of the image to look for specific details, like edges.

- Real-Life Analogy: Think of Convolution as a cookie-cutter:

- Imagine you’re trying to find if a cookie has chocolate chips.

- You use a small cookie-cutter tool to move around and find pieces of chocolate.

- This tool keeps moving over different parts of the cookie (image), and each time it detects a chocolate chip, it marks it.

- In CNNs, the filter (or “cookie-cutter”) moves over the entire image and creates a new version of the image, called a feature map, showing where specific features like edges or colors are located.
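
Here is a minimal sketch of the sliding window in plain Python. The function name `conv2d`, the tiny image, and the hand-picked edge filter are illustrative; in a real CNN the filter values are learned during training. As in most deep learning libraries, the filter is applied without flipping it.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, step of 1) and return the result grid."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    result = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the window under the filter by the filter, then add everything up.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        result.append(row)
    return result


# Dark left half (0), bright right half (1): one vertical edge in the middle.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Responds when brightness increases from left to right.
edge_filter = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, edge_filter)
for row in feature_map:
    print(row)  # each row: [0.0, 2.0, 0.0]
```

The output is large only in the middle column, exactly where the dark-to-bright edge sits, and 0 wherever the image is flat.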

Layer 2: Pooling Layer (Simplifying the Image)

Pooling simplifies information to make it easier to process. After detecting features, we want to make our image smaller while still keeping the important parts.

- Real-Life Analogy: Imagine you take a photo and then want to keep only the important details.

- Max Pooling is like using a tool that keeps only the largest value (the strongest signal) from each small section of the image.

- It reduces the size of the image and focuses on the prominent features.

- It’s like shrinking a picture but still being able to tell what’s in it — we keep the essential details.
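
Max pooling can be sketched as follows. The function name `max_pool2d`, the 2x2 window size, and the sample grid are illustrative choices.

```python
def max_pool2d(feature_map, size=2):
    """Split the grid into size x size blocks and keep the largest value of each."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

print(max_pool2d(feature_map))  # [[4, 2], [2, 8]]
```

The 4x4 grid shrinks to 2x2, yet the strongest value from each corner of the original survives.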

Layer 3: Flattening (Converting to a List)

After convolution and pooling, we end up with smaller images that hold key information. Flattening takes this shrunken version of the image and converts it into a list of numbers that can be fed to the final decision layer.

- Real-Life Analogy: Imagine you take all the essential pieces you found in the image and put them in a single row. This step turns all the detected features into a long list of important points.
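
In code, flattening is just reading the small grids row by row into one list. The function name `flatten` and the two sample grids are illustrative.

```python
def flatten(feature_maps):
    """Turn a stack of 2D grids into one long list, grid by grid and row by row."""
    return [value for grid in feature_maps for row in grid for value in row]


# Two small pooled grids, e.g. the outputs of two different filters.
pooled_maps = [
    [[4, 2], [2, 8]],
    [[0, 1], [3, 5]],
]

features = flatten(pooled_maps)
print(features)  # [4, 2, 2, 8, 0, 1, 3, 5]
```

No information is added or lost here; only the shape changes, so the next layer can treat the features as a simple list.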

Layer 4: Fully Connected Layer (Making Decisions)

After flattening, the list of numbers is fed into the Fully Connected Layer, which acts as the decision maker. It looks at the entire list and decides what the image most likely is.

- Real-Life Analogy: Think of the Fully Connected Layer as your brain:

- After seeing whiskers, pointy ears, and a fluffy tail, your brain says: “Aha, this must be a cat!”

- The fully connected layer looks at all the details gathered and then combines them to identify the object.
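
A minimal sketch of this decision step: each category gets a score that is a weighted sum of all the features, and softmax turns the scores into probabilities. The names `dense` and `softmax`, the labels, and every weight value are hand-picked assumptions for illustration; in a real network the weights are learned.

```python
import math


def dense(inputs, weights, biases):
    """One score per output: weighted sum of every input, plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def softmax(scores):
    """Turn raw scores into probabilities that add up to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically safe
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog", "bird"]
features = [4.0, 2.0, 2.0, 8.0]      # flattened list of detected features
weights = [
    [0.2, 0.1, 0.0, 0.3],            # how much each feature counts toward "cat"
    [0.1, 0.0, 0.2, -0.1],           # ... toward "dog"
    [0.0, 0.1, 0.1, 0.0],            # ... toward "bird"
]
biases = [0.0, 0.0, 0.0]

scores = dense(features, weights, biases)
probabilities = softmax(scores)
best = labels[probabilities.index(max(probabilities))]
print(best)  # cat
```

Every feature contributes to every score, which is why the layer is called "fully connected".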

Putting It All Together:

- Input Image: A picture of a cat is given to the CNN.

- Convolution Layer: The CNN applies filters to detect basic features, like edges or colors.

- Pooling Layer: The network simplifies the image, keeping only the essential information.

- Flattening: It turns the pooled image into a list of key features.

- Fully Connected Layer: The CNN examines the list of features and decides if it’s a cat, dog, bird, or something else.

Why Do CNNs Work Well for Images?

- Local Patterns: Images have local patterns, like the arrangement of pixels in edges or textures. CNNs are excellent at detecting these patterns.

- Layer-by-Layer Detail: As you go deeper into a CNN, the features it detects become more complex. Early layers recognize basic shapes (like lines), while deeper layers combine them to identify complex features (like eyes or fur patterns).

Example of How CNNs Learn:

Imagine teaching a child to recognize animals in pictures:

- Step 1: Start by teaching them to look for simple shapes — maybe pointy ears, whiskers, and a tail.

- Step 2: Help them combine those shapes into more complex features, like recognizing that pointy ears and a fluffy body mean “cat.”

- Step 3: Finally, they learn to say, “That’s a cat!” by combining all the details.

A CNN does something similar:

- Early layers recognize simple features like edges.

- Middle layers detect patterns and textures.

- Later layers learn to identify complex objects like cats or cars.

Recap with an Everyday Example:

Imagine looking at a puzzle with different parts that come together to reveal a picture:

- Convolutional layers look at each puzzle piece, identifying its shape and color.

- Pooling layers simplify it, keeping only the most important parts.

- Flattening and fully connected layers understand the entire puzzle and finally decide, “This puzzle is a picture of a cat.”

Summary:

- Convolutional Neural Networks (CNNs) are like pattern detectors that scan images in layers.

- Convolutional Layers detect basic features like edges and textures.

- Pooling Layers simplify images by reducing their size but keeping essential details.

- Fully Connected Layers make a decision based on detected features to identify the image.

Visualization to Imagine:

Think of a camera with different filters:

- First, it uses an edge-detecting filter to find outlines.

- Then it simplifies the image, zooming out to keep key parts.

- Finally, it passes this “zoomed-out, highlighted version” to a decision-making brain, which says, “This is a cat.”