AI Helper: Build Your Own Custom LLM Model on Ollama

Introduction:

In the previous article, we learned how easy it is to use Ollama for running large language models on our own computers. This time around, we’re taking things a step further by creating a custom LLM model tailored just for us. Let’s dive into this hands-on guide!

Use Case:

As a Drupal developer, I spend my days coding, reviewing, and debugging. But what if I had a reliable assistant to help me out? One that could review my code, find bugs, suggest improvements, or even generate new code!

Let’s see how Ollama can make the job easy.

Getting Started with Your Custom Model:

First things first: find a model in the Ollama Library that’s close to what you need. We’ll use ‘codellama’, an openly available LLM from Meta that can generate and discuss code.

Here’s how we roll with Ollama:

- Choosing a Model: Look for ‘codellama’ in the Ollama Library. It’s going to be our base model.

- Selecting Parameters: Many models, codellama included, are published in several variants (for example, different parameter sizes), each under its own tag. By default, Ollama pulls the `latest` tag, but you can select a specific one if needed.

  - Pull the latest tag: `ollama pull codellama`

  - Pull a specific tag: `ollama pull <model>:<tag>`
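The `<model>:<tag>` naming convention can be illustrated with a small Python helper. This is a minimal sketch, not part of Ollama: the function name `split_model_ref` is hypothetical, and it only assumes the default-to-`latest` behaviour described above.

```python
def split_model_ref(ref: str, default_tag: str = "latest") -> tuple:
    """Split an Ollama-style model reference such as 'codellama:7b'.

    Hypothetical helper for illustration: when no tag is given, the
    default tag ('latest') is assumed, mirroring `ollama pull codellama`.
    """
    # Only treat a colon after the last '/' as the tag separator, so a
    # namespaced name like 'user/model' is not split incorrectly.
    slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > slash:
        return ref[:colon], ref[colon + 1:]
    return ref, default_tag


if __name__ == "__main__":
    for ref in ["codellama", "codellama:7b"]:
        name, tag = split_model_ref(ref)
        print(f"{ref!r} -> model={name}, tag={tag}")
```

Running it shows that `codellama` and `codellama:latest` refer to the same model, while `codellama:7b` selects the 7B-parameter variant.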

Building an Assistant:

We’re building a custom model, Drupal Code Assistant, that understands Drupal development to the core. Follow these steps:

- Check out the Modelfile for codellama using: `ollama show --modelfile codellama`

- Create your Modelfile and set the parameters like this:

```
# Modelfile for creating a Drupal Code Assistant
FROM codellama
PARAMETER temperature 0.7
SYSTEM You are a Drupal developer expert, acting as an assistant. You offer help with generating code, reviewing code and providing suggestions, generating unit test cases and explaining code. You answer with code examples when possible.
```
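If you maintain several assistants, generating the Modelfile from code can keep them consistent. The sketch below is a hypothetical Python helper (`build_modelfile` is our own name, not an Ollama API) that emits the same FROM, PARAMETER and SYSTEM instructions; it only produces text, and `ollama create` still does the actual work. It wraps the system message in triple quotes, which the Modelfile format accepts for messages that span several lines.

```python
from typing import Mapping, Optional


def build_modelfile(base: str, system: str,
                    parameters: Optional[Mapping[str, object]] = None) -> str:
    """Render Modelfile text with FROM, PARAMETER and SYSTEM instructions."""
    if not base:
        # FROM is the one instruction every Modelfile must contain.
        raise ValueError("a base model is required for FROM")
    lines = [f"FROM {base}"]
    # One PARAMETER line per setting, e.g. 'PARAMETER temperature 0.7'.
    for name, value in (parameters or {}).items():
        lines.append(f"PARAMETER {name} {value}")
    # Triple quotes allow the system message to span multiple lines.
    lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # A shortened version of the system message used above.
    print(build_modelfile(
        "codellama",
        "You are a Drupal developer expert, acting as an assistant.",
        {"temperature": 0.7},
    ))
```

To use the output, save it as `Modelfile` (for example with `pathlib.Path("Modelfile").write_text(text)`) before running `ollama create`.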

- FROM (required): defines the base model to build from, with an optional tag.

  Syntax: `FROM <model name>:<tag>`

- PARAMETER: defines a parameter that is applied when the model is run.

  Syntax: `PARAMETER <parameter> <parametervalue>`

  We use `temperature` to set the level of creativity: increasing the temperature makes the model answer more creatively (Default: 0.8). The full list of valid parameters and values is in the Ollama Modelfile documentation listed under Resources Used.

- SYSTEM: sets the custom system message that specifies the behaviour of the assistant. We want our assistant to revolve around Drupal.

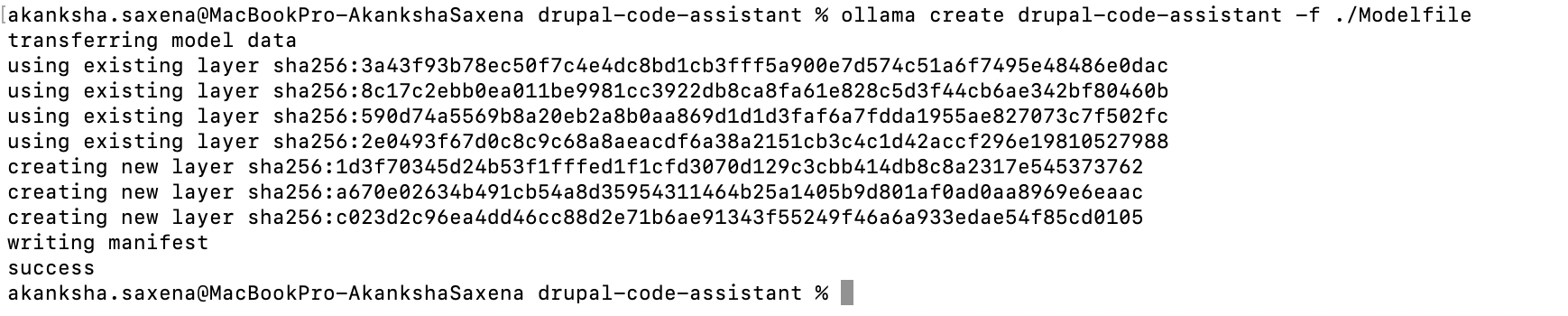
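To see why a higher temperature reads as more “creative”, it helps to look at how temperature is commonly applied during sampling: the model’s raw scores (logits) for candidate next tokens are divided by the temperature before a softmax turns them into probabilities. The sketch below uses made-up logits purely for illustration, and it ignores other sampling settings Ollama also applies, such as `top_k` and `top_p`.

```python
import math


def softmax_with_temperature(logits: list, temperature: float) -> list:
    """Turn raw scores into probabilities, scaled by a temperature > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Hypothetical scores for three candidate next tokens.
    logits = [2.0, 1.0, 0.5]
    for t in (0.2, 0.7, 1.5):
        probs = softmax_with_temperature(logits, t)
        print(f"temperature={t}: " + ", ".join(f"{p:.3f}" for p in probs))
```

Lower temperatures concentrate probability on the top-scoring token, so answers become more predictable; higher temperatures spread probability across the alternatives, so less likely continuations get picked more often.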

Deploy the model:

Create the model with `ollama create drupal-code-assistant -f ./Modelfile`, where `-f` points to the Modelfile you just wrote.

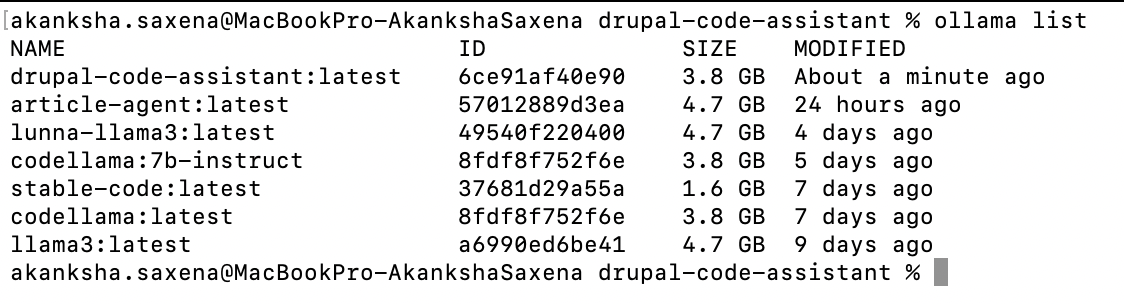
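If you rebuild the model often (for example, after tweaking the system message), the same command can be scripted. This is a minimal Python sketch that assumes the `ollama` CLI is on your `PATH`; `create_command` and `create_model` are hypothetical helper names that only wrap the `ollama create <name> -f <file>` command shown above.

```python
import shutil
import subprocess


def create_command(name: str, modelfile: str = "./Modelfile") -> list:
    """Build the argument list for `ollama create <name> -f <modelfile>`."""
    return ["ollama", "create", name, "-f", modelfile]


def create_model(name: str, modelfile: str = "./Modelfile") -> None:
    """Run `ollama create`, raising if the CLI is missing or the command fails."""
    if shutil.which("ollama") is None:
        raise FileNotFoundError("the ollama CLI was not found on PATH")
    # check=True turns a non-zero exit status into CalledProcessError.
    subprocess.run(create_command(name, modelfile), check=True)


if __name__ == "__main__":
    # Print the command instead of running it; call create_model() to execute.
    print(" ".join(create_command("drupal-code-assistant")))
```

Passing the arguments as a list (rather than a single shell string) avoids quoting problems if the Modelfile path contains spaces.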

Once your model has been created, confirm that it appears by listing all available models with `ollama list`.
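The same check can be done programmatically against a running Ollama server, whose `/api/tags` endpoint returns the locally available models (the data behind `ollama list`). A minimal sketch assuming the default address `http://localhost:11434`; `model_names`, `has_model` and `fetch_tags` are hypothetical helper names, and the sample data only mimics the response’s `models`/`name` fields.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address of a local Ollama server


def model_names(tags_response: dict) -> list:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def has_model(names: list, model: str) -> bool:
    """Check whether `model` is present, treating a missing tag as 'latest'."""
    wanted = model if ":" in model else f"{model}:latest"
    return wanted in names


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Query a running Ollama server for its locally available models."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Sample data shaped like an /api/tags response (no server needed).
    sample = {"models": [{"name": "drupal-code-assistant:latest"},
                         {"name": "codellama:latest"}]}
    print(has_model(model_names(sample), "drupal-code-assistant"))
```

With the server running, `has_model(model_names(fetch_tags()), "drupal-code-assistant")` performs the live check.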

Run the Model:

Start it with `ollama run drupal-code-assistant`, which opens an interactive prompt in your terminal.
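Besides the interactive prompt, a running Ollama server also exposes a REST API (by default on `http://localhost:11434`), so scripts or editor plugins can talk to the same model. This is a minimal sketch using only Python’s standard library to call the non-streaming `/api/generate` endpoint; `generate_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default local Ollama address


def generate_request(prompt: str, model: str = "drupal-code-assistant",
                     base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST /api/generate request for the custom model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(generate_request(prompt)) as resp:
        # With "stream": False the whole answer arrives in one JSON object.
        return json.load(resp)["response"]


if __name__ == "__main__":
    req = generate_request("Explain what a Drupal service container is.")
    print(req.get_method(), req.full_url)
```

The request body can also carry an `options` object (for example `{"temperature": 0.2}`) to override Modelfile parameters for a single call.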

Testing Out Your Model:

Here’s how you can start conversations with your Drupal Code Assistant:

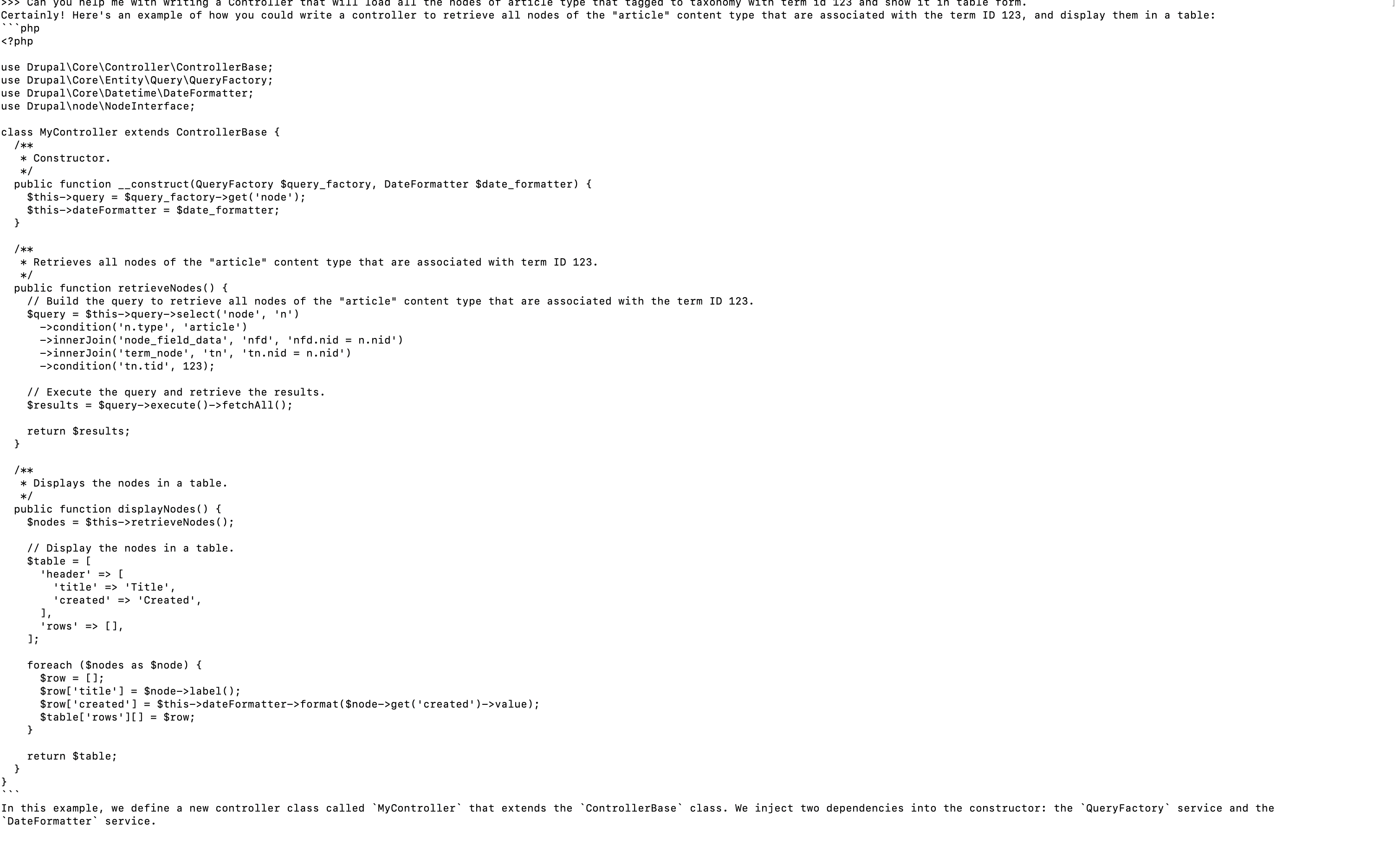

Example 1: Requesting Code

Prompt: Can you help me write a Drupal Controller to display articles tagged with term ID 123 in table format?

Response:

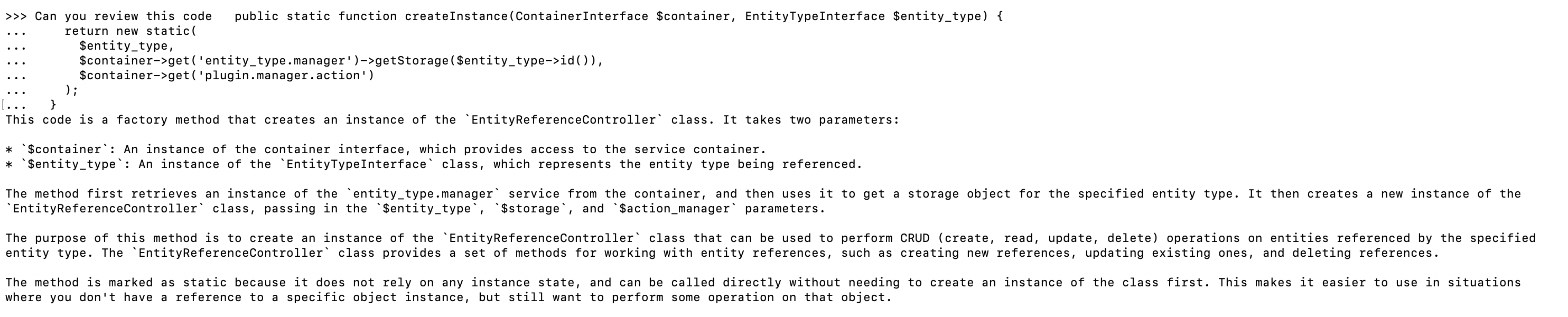

Example 2: Reviewing Code

Prompt: Can you review this code?

```php
public static function createInstance(ContainerInterface $container, EntityTypeInterface $entity_type) {
  return new static(
    $entity_type,
    $container->get('entity_type.manager')->getStorage($entity_type->id()),
    $container->get('plugin.manager.action')
  );
}
```

Response:

Your assistant should now provide responses tailored to the Drupal development context.
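Because the system message is already baked into the model, a review request only needs the user’s message. This is a minimal sketch of sending a review prompt like Example 2 through Ollama’s `/api/chat` endpoint instead of the interactive prompt, assuming the default local address; `review_payload`, `reply_text` and `review` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default local Ollama address


def review_payload(code: str, model: str = "drupal-code-assistant") -> dict:
    """Wrap a code snippet in a single-turn review request for POST /api/chat."""
    prompt = f"Can you review this code?\n\n{code}"
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def reply_text(chat_response: dict) -> str:
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return chat_response["message"]["content"]


def review(code: str, base_url: str = OLLAMA_URL) -> str:
    """Send the review request to a running Ollama server and return the reply."""
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(review_payload(code)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return reply_text(json.load(resp))
```

Unlike `/api/generate`, the chat endpoint takes a list of messages, so follow-up questions can be asked by appending the assistant’s reply and a new user message to `messages` before the next call.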

Final Thoughts:

Remember where we started, with simple tasks on Ollama? Now you’ve got a full-on, Drupal-savvy assistant running in your local environment. Stay with me: in the next article, we will create the front end of this assistant. Until then, enjoy your new assistant!

Video Explanation

If you prefer a video version of this content, check out this video.

Resources Used:

- https://github.com/ollama/ollama/blob/main/docs/modelfile.md

- https://unmesh.dev/post/ollama_custom_model/

- https://www.gpu-mart.com/blog/custom-llm-models-with-ollama-modelfile

- https://copilot.microsoft.com/

Posts in this series

- Drupal Code Assistant: Build Chatbot Using Ollama

- AI Helper: Build Your Own Custom LLM Model on Ollama

- Introducing Ollama: The Local Powerhouse for Language Models