Song Recommender: Building a RAG Application for Beginners From Scratch

Have you been trying to understand RAG and read a bunch of articles, yet feel overwhelmed by how complicated it seems to implement? You’ve just hit the jackpot. In this article, I aim to demystify the RAG concept and demonstrate its utility by building a hands-on song recommender application – all without unnecessary complexity. There’s no need for a deep understanding of AI and machine learning; basic knowledge of Python and a willingness to learn something new are all that’s required. So, without further ado, let’s get started.

To learn more about RAG, read my previous article here.

Imagine this: you’re humming a tune or a string of lyrics is stuck in your head. You loved the song and want to stay in the groove by listening to similar songs. That’s exactly where our RAG application comes into play. Just type in the lyrics or mention the genre, and voila! Our application recommends a song that aligns with your input.

Let’s build a small database of songs with their titles and details, where ‘detail’ includes a few lines from the lyrics of the song and its genre.

songs_corpus = [
    {"title": "Bohemian Rhapsody", "detail": "lyrics: Is this the real life?; genre: Roc"},
    {"title": "Shake It Off", "detail": "lyrics: Players gonna play, hate; genre: Pop"},
    {"title": "Thriller", "detail": "lyrics: Cause this is thriller; genre: Pop"},
    {"title": "Rolling in the Deep", "detail": "lyrics: There's a fire start; genre: Pop"},
    {"title": "Smells Like Teen Spirit", "detail": "lyrics: With the lights out; genre: Gru"},
    {"title": "Hotel California", "detail": "lyrics: On a dark desert hwy; genre: Roc"},
    {"title": "Sweet Child o' Mine", "detail": "lyrics: She's got eyes blue; genre: Roc"},
    {"title": "Wonderwall", "detail": "lyrics: Because maybe, save; genre: Alt"},
    {"title": "Billie Jean", "detail": "lyrics: But the kid is not; genre: Pop"},
    {"title": "Firework", "detail": "lyrics: Do you ever feel so; genre: Pop"}
]

To find the similarity between the user input and the songs of the corpus, we are going to use Jaccard Similarity.

Let’s understand it with an example:

Set A: {“I”, “love”, “cats”, “and”, “dogs”}

Set B: {“We”, “love”, “cats”, “not”, “dogs”}

Jaccard Similarity = Intersection(A, B) / Union(A, B)

Intersection(A, B) = common words in A and B = love, cats, dogs = 3 words

Union(A, B) = All unique words in A and B = I, love, cats, and, dogs, we, not = 7 words

Jaccard Similarity = 3 / 7 ≈ 0.43
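The arithmetic above can be checked directly with Python's built-in sets. This is only a small sketch: the names `set_a` and `set_b` are illustrative, and the words are written in lowercase so that "We" and "we" count as the same word.

```python
# Worked example from the text, reproduced with Python sets.
set_a = {"i", "love", "cats", "and", "dogs"}
set_b = {"we", "love", "cats", "not", "dogs"}

intersection = set_a & set_b  # words present in both sets
union = set_a | set_b         # all unique words across both sets

similarity = len(intersection) / len(union)

print(sorted(intersection))   # ['cats', 'dogs', 'love']
print(len(union))             # 7
print(round(similarity, 2))   # 0.43
```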

Let’s code it up.

def tokenize(text):
    # Lowercase the text and split it on spaces to get a set of unique tokens.
    return set(text.lower().split(" "))

# Measures the similarity between two sets of tokens.
# Jaccard Index = Intersection(A, B) / Union(A, B)
def jaccard_similarity(query, document):
    tokenize_query = tokenize(query)
    tokenize_document = tokenize(document)
    intersection = tokenize_query.intersection(tokenize_document)
    union = tokenize_query.union(tokenize_document)
    similarity = len(intersection) / len(union)
    return similarity
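One thing to be aware of: splitting on spaces keeps punctuation attached to words, so `life?;` and `life` count as different tokens. A possible variation, which the rest of this article does not use, is to extract words with a regular expression. The name `tokenize_words` is hypothetical and only here for comparison.

```python
import re

def tokenize(text):
    # Approach used in this article: lowercase, then split on single spaces.
    return set(text.lower().split(" "))

def tokenize_words(text):
    # Hypothetical variant: keep runs of letters, digits, and apostrophes,
    # so trailing punctuation such as "?" or ";" is dropped.
    return set(re.findall(r"[a-z0-9']+", text.lower()))

detail = "lyrics: Is this the real life?; genre: Roc"

print(sorted(tokenize(detail)))        # contains 'life?;' and 'lyrics:'
print(sorted(tokenize_words(detail)))  # contains 'life' and 'lyrics'
```

With the variant, a query such as "real life" would match both words, while the space-based tokenizer matches only "real".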

Now use the jaccard_similarity function to get the song with maximum similarity from our songs corpus.

def get_relevant_document(query):
    relevant_song_title = ''
    max_similarity = 0
    # Keep the title of the song whose detail is most similar to the query.
    for song in songs_corpus:
        detail = song['detail']
        similarity = jaccard_similarity(query, detail)
        if similarity > max_similarity:
            max_similarity = similarity
            relevant_song_title = song['title']
    return relevant_song_title
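Because `get_relevant_document` keeps only the best title, it hides how close the other songs were. The sketch below returns every song with its score so you can inspect the ranking. It assumes a hypothetical helper named `rank_songs` and a three-song subset of the corpus called `sample_corpus`; neither is part of the original code.

```python
def tokenize(text):
    return set(text.lower().split(" "))

def jaccard_similarity(query, document):
    query_tokens = tokenize(query)
    document_tokens = tokenize(document)
    return len(query_tokens & document_tokens) / len(query_tokens | document_tokens)

# Three entries copied from songs_corpus to keep the example short.
sample_corpus = [
    {"title": "Bohemian Rhapsody", "detail": "lyrics: Is this the real life?; genre: Roc"},
    {"title": "Thriller", "detail": "lyrics: Cause this is thriller; genre: Pop"},
    {"title": "Firework", "detail": "lyrics: Do you ever feel so; genre: Pop"},
]

def rank_songs(query, corpus):
    # Hypothetical helper: score every song, highest similarity first.
    scored = [(jaccard_similarity(query, song["detail"]), song["title"]) for song in corpus]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)

for score, title in rank_songs("cause this is thriller", sample_corpus):
    print(f"{score:.3f}  {title}")
```

For this query, "Thriller" comes first, "Bohemian Rhapsody" second (it shares "is" and "this"), and "Firework" last with a score of zero.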

Let’s test what we have built so far.

user_input = input("Tell me what you are thinking, and I will recommend a song.\n")
relevant_document = get_relevant_document(user_input)
print("I recommend you listen to: " + relevant_document)

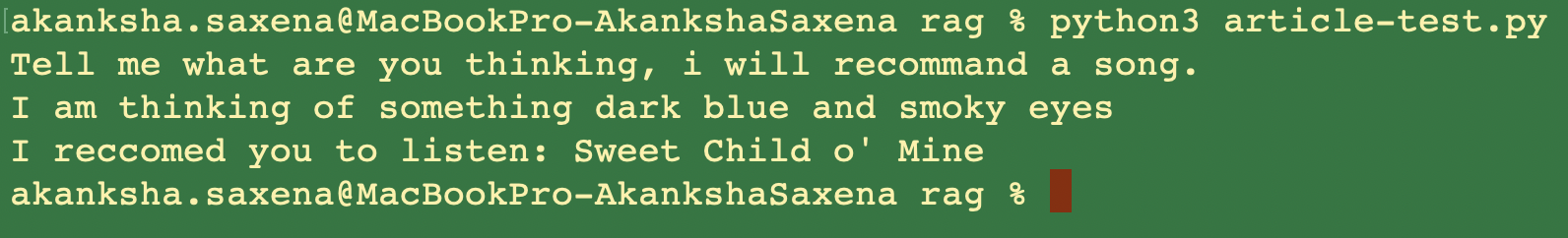

Output:

Congratulations, it works! This process of retrieving the relevant data from your own content is known as Retrieval. Now, let’s test one more example.

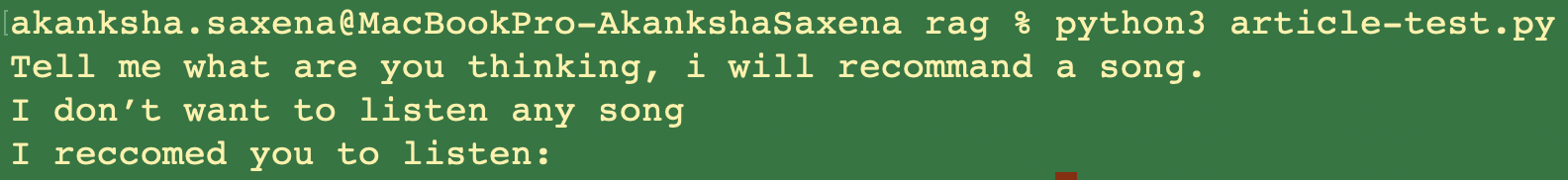

Output:

It did not work for the negative case. That’s where we need a large language model to fall back on.
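To see why a negative request slips through, note that Jaccard similarity only counts shared words and has no notion of intent. In the sketch below, the query `i don't like pop` is a made-up example (the input in the screenshot above may differ). It still shares the token `pop` with a Pop entry, so retrieval returns a title anyway.

```python
def tokenize(text):
    return set(text.lower().split(" "))

def jaccard_similarity(query, document):
    query_tokens = tokenize(query)
    document_tokens = tokenize(document)
    return len(query_tokens & document_tokens) / len(query_tokens | document_tokens)

detail = "lyrics: Cause this is thriller; genre: Pop"

positive = jaccard_similarity("i like pop", detail)
negative = jaccard_similarity("i don't like pop", detail)  # made-up negative query

# Both queries share the token "pop" with the document, so both scores are non-zero.
print(positive > 0, negative > 0)  # True True
```

Since both scores are above zero, the retriever alone cannot tell a request from a refusal. Interpreting the wording is left to the LLM step.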

Integrate LLM:

Augmentation: We will augment this relevant song with the original user input and prepare a prompt to pass to the LLM for generating a response.

# Augment the original query with the relevant song.
prompt = f'''
You are a bot that makes recommendations for songs. You answer in very short sentences and do not include extra information.
This is the recommended song: {relevant_document}
The user input is: {user_input}
Compile a recommendation to the user based on the recommended song and the user input. If the user has no interest, simply decline.
'''
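If you want to reuse the template for several inputs, one option is to wrap it in a function. This is a sketch: `build_prompt` is a hypothetical helper that is not part of the original code, and its wording mirrors the template above.

```python
def build_prompt(relevant_document, user_input):
    # Hypothetical helper: fills the prompt template with the retrieved
    # song title and the user's original input.
    return f"""
You are a bot that makes recommendations for songs. You answer in very short sentences and do not include extra information.
This is the recommended song: {relevant_document}
The user input is: {user_input}
Compile a recommendation to the user based on the recommended song and the user input. If the user has no interest, simply decline.
"""

prompt = build_prompt("Thriller", "cause this is thriller")
print(prompt)
```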

Generation

This step is pretty simple: pass the prompt we have generated to the LLM. We are using Ollama to run the LLM (llama3) locally, and Langchain to invoke it.

More about Ollama and how to run it locally: LINK

Langchain: Langchain is a framework designed to simplify the creation of applications using large language models. Learn more about Langchain.

The code is straightforward: import the ollama module from langchain_community.llms and use its Ollama class to specify which model you want to use for your application. I am using llama3:latest. Once you have created the llm object, call its invoke method with the prompt to generate the response. We wrap all of this in the get_response() function.

from langchain_community.llms import ollama

def get_response(prompt):
    # Create a client for the locally running llama3 model and generate a response.
    llm = ollama.Ollama(model="llama3:latest")
    response = llm.invoke(prompt)
    return response

Final touch: call get_response and print the result to the user.

response = get_response(prompt)
print("I recommend you listen to: " + response)

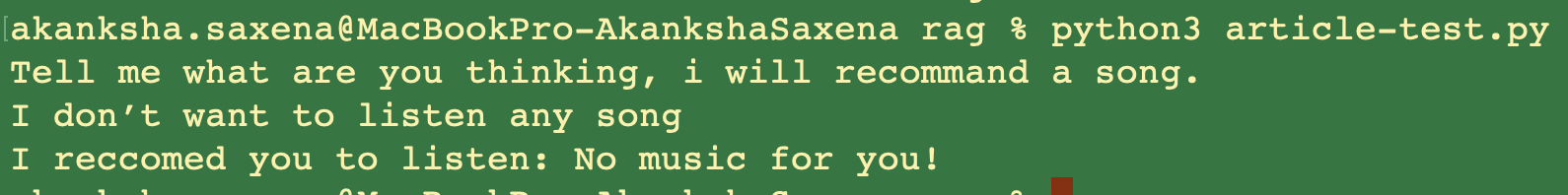

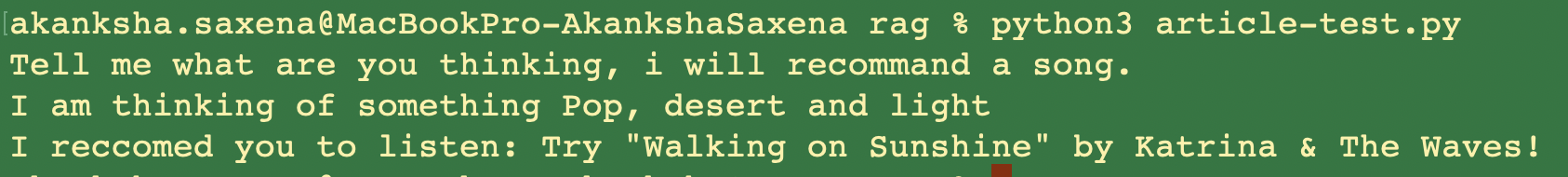

Test it on the same inputs.

Attempt 1:

Attempt 2:

Congratulations! You have built a RAG application from scratch.
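Putting the three stages together, the flow can be sketched as a single function. To keep the example runnable without a local Ollama server, the generation step is passed in as a callable. The names `retrieve`, `recommend`, `fake_llm`, and `sample_corpus` are hypothetical and used only for illustration; in the real application you would pass `get_response` instead of `fake_llm`.

```python
def tokenize(text):
    return set(text.lower().split(" "))

def jaccard_similarity(query, document):
    query_tokens = tokenize(query)
    document_tokens = tokenize(document)
    return len(query_tokens & document_tokens) / len(query_tokens | document_tokens)

# Two entries copied from songs_corpus to keep the example short.
sample_corpus = [
    {"title": "Thriller", "detail": "lyrics: Cause this is thriller; genre: Pop"},
    {"title": "Firework", "detail": "lyrics: Do you ever feel so; genre: Pop"},
]

def retrieve(query, corpus):
    # Retrieval: title of the most similar song, or '' if nothing overlaps.
    best_title, best_score = '', 0
    for song in corpus:
        score = jaccard_similarity(query, song["detail"])
        if score > best_score:
            best_title, best_score = song["title"], score
    return best_title

def recommend(user_input, corpus, generate):
    title = retrieve(user_input, corpus)                      # Retrieval
    prompt = (f"This is the recommended song: {title}\n"      # Augmentation
              f"The user input is: {user_input}\n")
    return generate(prompt)                                   # Generation

def fake_llm(prompt):
    # Stand-in for get_response(): echoes the first line of the prompt.
    return prompt.splitlines()[0]

print(recommend("cause this is thriller", sample_corpus, fake_llm))
```

Passing the generator as an argument is a design choice made for this sketch: it lets you test retrieval and augmentation on their own before wiring in the LLM.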

In the next article, we will delve a little deeper. Stay tuned!

Full code

from langchain_community.llms import ollama

songs_corpus = [
    {"title": "Bohemian Rhapsody", "detail": "lyrics: Is this the real life?; genre: Roc"},
    {"title": "Shake It Off", "detail": "lyrics: Players gonna play, hate; genre: Pop"},
    {"title": "Thriller", "detail": "lyrics: Cause this is thriller; genre: Pop"},
    {"title": "Rolling in the Deep", "detail": "lyrics: There's a fire start; genre: Pop"},
    {"title": "Smells Like Teen Spirit", "detail": "lyrics: With the lights out; genre: Gru"},
    {"title": "Hotel California", "detail": "lyrics: On a dark desert hwy; genre: Roc"},
    {"title": "Sweet Child o' Mine", "detail": "lyrics: She's got eyes blue; genre: Roc"},
    {"title": "Wonderwall", "detail": "lyrics: Because maybe, save; genre: Alt"},
    {"title": "Billie Jean", "detail": "lyrics: But the kid is not; genre: Pop"},
    {"title": "Firework", "detail": "lyrics: Do you ever feel so; genre: Pop"}
]

def tokenize(text):
    # Lowercase the text and split it on spaces to get a set of unique tokens.
    return set(text.lower().split(" "))

# Measures the similarity between two sets of tokens.
# Jaccard Index = Intersection(A, B) / Union(A, B)
def jaccard_similarity(query, document):
    tokenize_query = tokenize(query)
    tokenize_document = tokenize(document)
    intersection = tokenize_query.intersection(tokenize_document)
    union = tokenize_query.union(tokenize_document)
    similarity = len(intersection) / len(union)
    return similarity

def get_relevant_document(query):
    relevant_song_title = ''
    max_similarity = 0
    # Keep the title of the song whose detail is most similar to the query.
    for song in songs_corpus:
        detail = song['detail']
        similarity = jaccard_similarity(query, detail)
        if similarity > max_similarity:
            max_similarity = similarity
            relevant_song_title = song['title']
    return relevant_song_title

user_input = input("Tell me what you are thinking, and I will recommend a song.\n")
relevant_document = get_relevant_document(user_input)

# Augment the original query with the relevant song.
prompt = f'''
You are a bot that makes recommendations for songs. You answer in very short sentences and do not include extra information.
This is the recommended song: {relevant_document}
The user input is: {user_input}
Compile a recommendation to the user based on the recommended song and the user input. If the user has no interest, simply decline.
'''

def get_response(prompt):
    # Create a client for the locally running llama3 model and generate a response.
    llm = ollama.Ollama(model="llama3:latest")
    response = llm.invoke(prompt)
    return response

response = get_response(prompt)
print("I recommend you listen to: " + response)

GitHub Repository

You can find the GitHub repository here.
